Decision Fatigue: Why Your Brain Shuts Down by Evening

There’s a particular kind of tired that shows up in a world of recommendations, summaries, rankings, and auto-complete. Not the tired of doing too much—more like the fog that comes from not quite knowing what you think until something else tells you.

Have you ever felt less certain after getting “the perfect suggestion”?

AI decision fatigue isn’t about being weak-willed or tech-obsessed. It’s what can happen when day-to-day judgment is repeatedly handed off, even in small ways. The nervous system enjoys reduced effort in the moment, but over time it can lose some of the “done” signal that comes from completing choices and living inside their consequences.

AI decision fatigue often feels paradoxical: you have more support than ever, yet your mind feels less steady. People describe a low-grade mental haze, confidence that is slower to arrive, and an impulse to check “one more tool” before committing to even simple decisions. [Ref-1]

This doesn’t mean anything is wrong with your character. It’s a sign that your system is adapting to a changed environment—one where external guidance is always available, and internal judgment isn’t asked to fully finish the loop.

When decisions are constantly “handled,” the brain can start to treat your own judgment as optional.

Your executive system—attention, working memory, prioritization—doesn’t only engage for big life choices. It stays calibrated through ordinary moments: choosing what matters, weighing tradeoffs, holding uncertainty, and closing the decision with a clear internal “yes.”

When algorithms repeatedly take the first pass (what to read, buy, watch, write, eat, reply), that executive engagement can shrink. The catch is that offloading can reduce effort in one slice of time while raising load overall: more options, more comparisons, more second-guessing, and less felt finality. [Ref-2]

What happens when your attention rarely has to “land”?

Humans are built to conserve energy when the environment offers a reliable shortcut. If something seems accurate, fast, and socially validated, the nervous system treats it like a safety cue: “No need to spend extra fuel here.”

This is one reason automation bias happens—our minds naturally lean toward what appears authoritative or machine-precise, especially under time pressure or cognitive strain. [Ref-3]

In other words, reliance is not primarily a psychological weakness. It’s an efficient biological strategy—until it quietly displaces the internal processes that generate orientation and closure.

Uncertainty is metabolically expensive. Holding multiple possibilities, anticipating outcomes, and choosing a direction all require sustained regulation. AI assistance can compress that whole process into a neat answer, a ranked list, or a confident summary.

In the moment, that can feel like immediate relief: fewer unknowns, less friction, less exposure to consequences. But relief is a state change—not the same as completion. When the choice didn’t fully form inside you, the nervous system may not register it as truly “done,” even if the task is finished. [Ref-4]

That’s where fatigue can emerge: not from deciding too much, but from repeatedly moving forward without the internal settling that comes from owning the decision.

Modern tech culture often treats delegation as the highest form of efficiency: outsource the small stuff, preserve your brain for the “important” work. The problem is that autonomy isn’t stored in a separate compartment. It’s maintained by repetition—by choosing, adjusting, and recognizing your own reasoning as real.

Over time, heavy reliance can weaken critical thinking in a very specific way: not by making you less intelligent, but by making discernment feel less accessible under ordinary conditions. [Ref-5]

One common result is an avoidance loop, in which the system learns that discomfort (uncertainty, effort, ambiguity, social risk) can be bypassed quickly. AI becomes a powerful bypass: it offers an immediate path forward without requiring you to tolerate the unfinishedness of not-yet-knowing.

This isn’t “avoidance because you’re afraid.” It’s avoidance because resistance gets muted. The environment makes it easy to skip the internal negotiation that creates ownership.

Overreliance is a known risk pattern in human-automation interaction: when tools are consistently available and feel authoritative, people can defer even when their own judgment is sufficient. [Ref-6]

AI decision fatigue rarely announces itself dramatically. It often shows up as subtle friction—moments where your mind stalls unless an external prompt appears.

Automation bias research describes how human confidence can shift in the presence of automated recommendations—especially when systems appear consistent or high-status. [Ref-7]

Those stalls are regulatory patterns: your system is seeking a stable reference point. The issue is that the reference point increasingly sits outside you.

Cognitive offloading—using external tools to reduce mental work—can be useful and normal. The complication is what gets offloaded. If the tool mostly handles storage (reminders, calendars), you may feel supported. If it increasingly handles judgment (what matters, what’s best, what to say), you may feel subtly unmoored. [Ref-8]

This matters because discernment is not just a skill; it’s an identity function. It’s part of how a person experiences continuity: “This is what I value. This is how I decide. This is the kind of person I am in situations like this.”

When that process is repeatedly outsourced, the nervous system can lose coherence. Not emotionality—coherence: fewer internal anchors, fewer natural endpoints, more reliance on external certainty to feel safe enough to proceed.

Once internal confidence thins, it makes sense that reliance increases. The tool feels clearer, faster, and more decisive than a tired mind. But each time the system defers, the “decision muscles” get fewer reps, and the next moment of uncertainty feels heavier.

This is how a stable loop forms: not because you’re incapable, but because the environment rewards outsourcing with immediate relief while delaying the costs. Over time, the mind may interpret ordinary ambiguity as a sign that something is wrong—when it’s simply a normal part of choosing. [Ref-9]

It’s not that you forgot how to think. It’s that thinking stopped reaching a satisfying end point.

There’s a difference between having an answer and having closure. Closure is the physiological stand-down that comes when your system recognizes: “I chose. I can stop scanning.”

AI can generate answers, but it can’t automatically create that stand-down inside a human body. That settling tends to emerge when your own reasoning completes—when pacing, uncertainty tolerance, and personal priorities are allowed to finish their arc. Research discussions around AI-assisted decision-making increasingly distinguish between reducing effort and supporting human decision quality across time. [Ref-10]

This is the bridge back to agency: not through more pressure or self-improvement, but through restoring the conditions where decisions can fully land as yours.

One of the most underappreciated stabilizers of judgment is dialogue—real-time exchange where your thinking becomes clearer through relationship, context, and paced response. Human conversation carries safety cues that algorithms can’t replicate: mutual understanding, accountability, and the feeling that meaning is being built, not extracted.

In a fragmented attention environment, human dialogue also slows the tempo. That slowdown is not inefficiency—it’s often what allows discernment to complete instead of staying perpetually “almost decided.” Digital attention research highlights how modern platforms can amplify fatigue and dependence through constant pull and rapid gratification cycles. [Ref-11]

What changes when your thinking is witnessed rather than computed?

When decision fatigue eases, people often notice a quiet shift: less compulsion to consult, more ability to choose without perfect certainty, and a smoother transition from consideration to commitment.

This isn’t about becoming more emotional or more motivated. It’s about capacity returning—attention that can hold ambiguity without spiraling, and a nervous system that can register completion. With restored closure, confidence tends to reappear as a byproduct: the sense that your judgment is available again. [Ref-12]

Algorithms are designed to optimize for engagement, relevance, prediction, or performance metrics. Human lives are organized by something different: values, relationships, identity continuity, and the need to feel at home inside your own choices.

When autonomy is restored, decisions start to align with lived priorities rather than constant maximizing. The goal isn’t to reject tools; it’s to keep tools in the role of support, not steering wheel. Cognitive offloading can be beneficial when it frees capacity for what matters—but destabilizing when it replaces the process that makes “what matters” coherent in the first place. [Ref-13]

Meaning isn’t found in the best option. It’s formed when a choice becomes part of who you are.

It helps to name what’s happening: not laziness, not dependence as identity, but a predictable shift in where certainty comes from. When the center of gravity moves outside the self, the nervous system adapts by waiting for external confirmation—and fatigue follows.

Automation bias is one way this shows up: the tendency to defer to automated suggestions even when your own judgment is intact. [Ref-14] In daily life, that can translate into fewer, smaller moments of self-trust.

Agency returns most reliably when life contains enough completion—enough choices that are carried through, digested, and allowed to settle into identity. In that context, AI can remain what it is at its best: a resource that serves your discernment, rather than a source of meaning.

AI can reduce friction, but it can’t live your decisions for you. The steadiness many people are looking for—clarity, confidence, a sense of “I know what I’m doing”—tends to come from a human capacity that strengthens through completion.

When choices are made and owned, the nervous system gets its stand-down signal, and identity becomes more coherent over time. That’s not motivation. It’s stabilization. And it’s why true clarity often grows not from delegating decisions, but from rebuilding the conditions where you can choose and feel finished. [Ref-15]

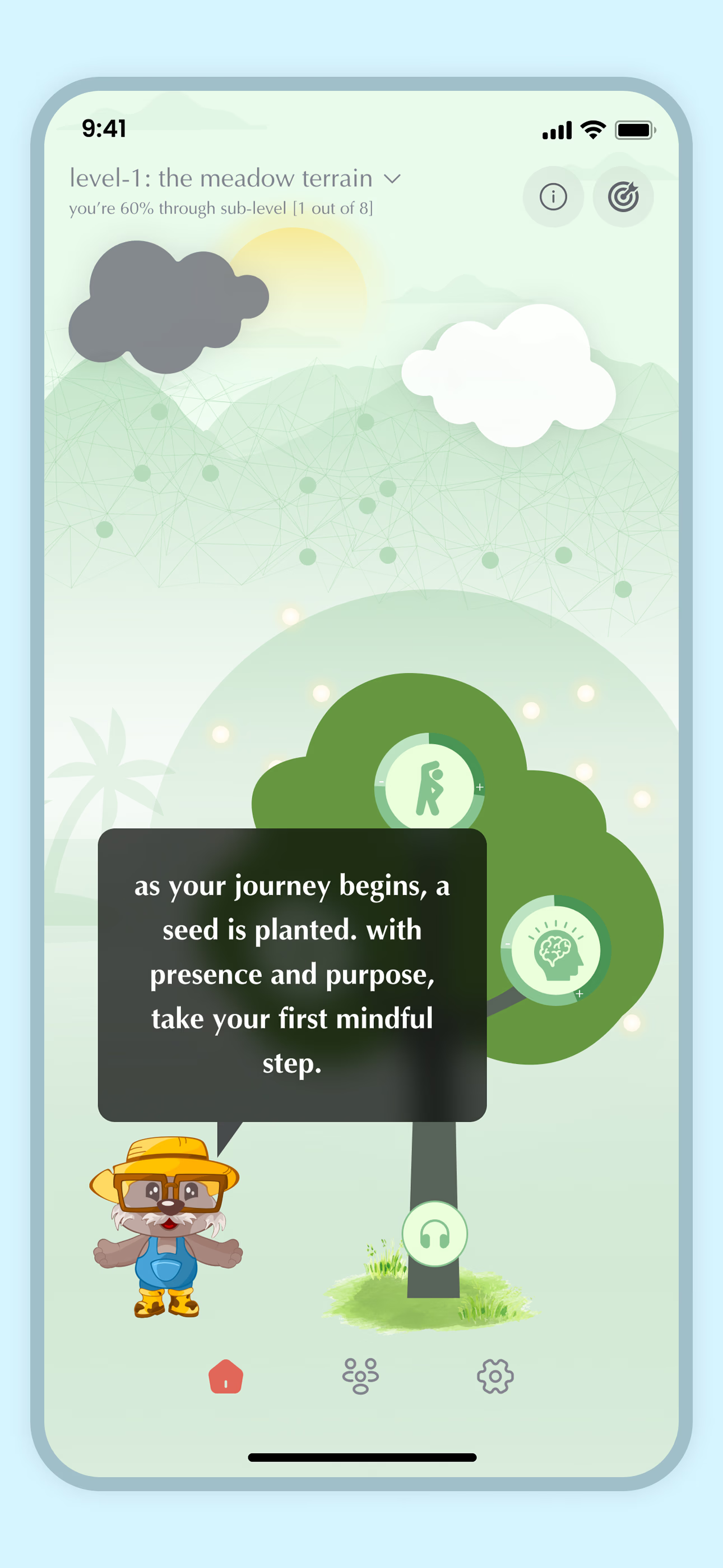

From theory to practice — meaning forms when insight meets action.

From Science to Art.

Understanding explains what is happening. Art allows you to feel it—without fixing, judging, or naming. Pause here. Let the images work quietly. Sometimes meaning settles before words do.